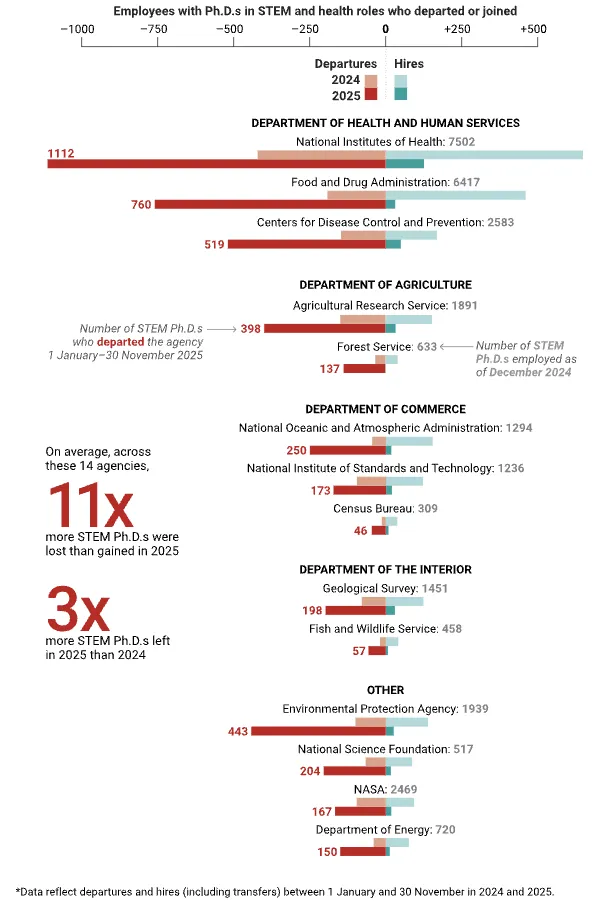

When I see examples of ChatGPT in action, I am reminded of the answers that college students provide on test questions. Yesterday, I finally got around to asking my first Chat question. I decided to test the famous AI with a question that students usually get wrong. Chat got the question wrong. (And no, the “ceteris paribus” qualifier doesn’t help at all.) I then asked a follow-up question, hoping that a clearer version would nudge Chat in the right direction.

As you can see, on the second question Chat is hopelessly confused (if you’ll excuse me for anthropomorphizing a machine). Chat has some ideas about price and quantity demanded, price and quantity supplied, and the concept of equilibrium, but doesn’t know how to put them together in a coherent fashion. In other words, Chat is a B student in a college economics course.

This post is not about ChatGPT. This new technology is actually quite impressive, and it seems likely that future versions will be even more impressive. Rather, this post is about the state of economics.

Suppose I claimed that “reasoning from a price change” is very widespread in the field of economics. How could I justify this claim to a doubter? I suppose I could dig up an example of reasoning from a price change in a news article, or even an academic journal. But that would represent merely a single anecdote, not proof of a widespread problem.

ChatGPT formulates its answers from patterns learned by training on a vast body of text, including a great many economics documents. Thus its answers in some sense represent the consensus view of people who write books and articles on the subject of economics. I’ve come to believe that most people don’t actually understand supply and demand, and the Chat response reinforces this view.

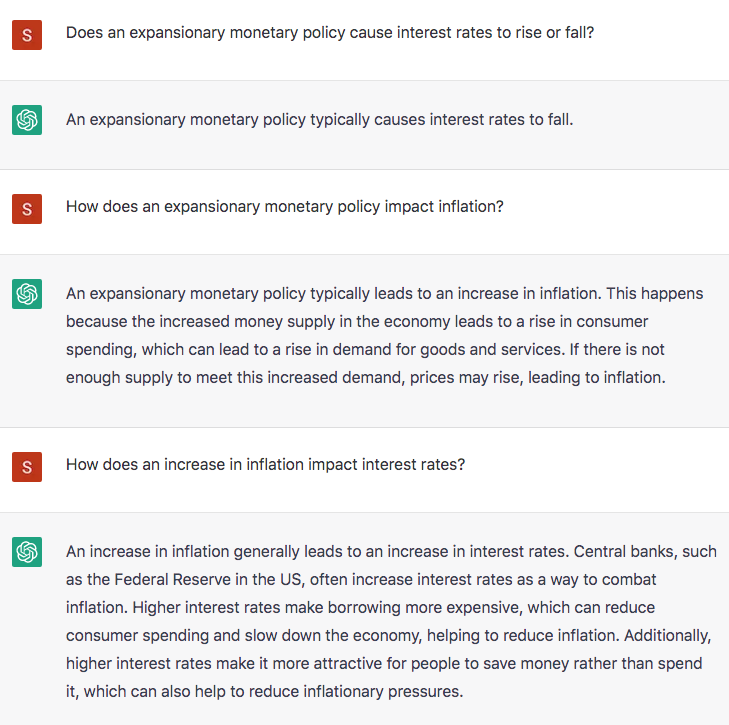

If I am correct, and Chat is like a mirror that reflects both the strengths and weaknesses of our understanding of economics, then I should be able to predict its failures. And I believe I can do so. I followed up my “reasoning from a price change” question with a set of questions aimed at exposing our weak understanding of the relationship between monetary policy and interest rates:

See how easy it is to trick ChatGPT? After 35 years of teaching thousands of students, I can predict how a typical student would answer the three questions above. I know that their answers will not be entirely consistent. And it does no good to claim there’s a grain of truth in each answer. There is. But ChatGPT doesn’t just give yes and no answers; it tries to explain the various possibilities. Is this string of answers likely to be helpful to a college student? Or would it further confuse them? If Chat actually “understood” this stuff, it would give an answer pointing to the complexity of this issue.

Again, this post is not about AI. Others have documented that Chat often gives inadequate answers. That’s not what interests me. Rather, I see Chat as a useful tool for diagnosing weaknesses in the field of economics. It allows us to peer into the collective brain of society.

The questions that Chat gets wrong are the questions that most of our students get wrong. Indeed, even many economists are too quick to equate falling interest rates with an expansionary monetary policy. ChatGPT points to the areas where our educational system needs to do better.
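One standard way to see why falling interest rates need not signal easy money is the approximate Fisher relation: nominal rate ≈ real rate + expected inflation. The sketch below is only an illustration under that assumption; the function name `nominal_rate` and all the numbers (in percentage points) are hypothetical, not taken from the questions above. It shows that a tightening which nudges the real rate up while pulling expected inflation down sharply can still leave the nominal rate lower.

```python
# Approximate Fisher relation: nominal rate ≈ real rate + expected inflation.
# All numbers are hypothetical and expressed in percentage points.

def nominal_rate(real_rate, expected_inflation):
    """Approximate nominal interest rate via the Fisher relation."""
    return real_rate + expected_inflation


# Hypothetical starting point.
before = nominal_rate(real_rate=2.0, expected_inflation=3.0)

# Hypothetical tight-money outcome: the real rate edges up,
# but expected inflation falls by more.
after = nominal_rate(real_rate=2.5, expected_inflation=1.0)

print(before, after)  # 5.0 3.5
```

In this example the nominal rate falls from 5.0 to 3.5 even though policy got tighter, which is exactly the case where equating lower rates with easier money goes wrong.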

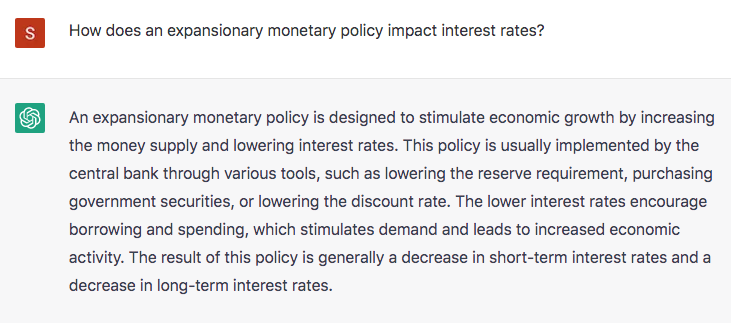

PS. You might argue that in my first monetary question I forced Chat to pick one direction of change. But when the question is more open-ended, the answer is arguably even worse:

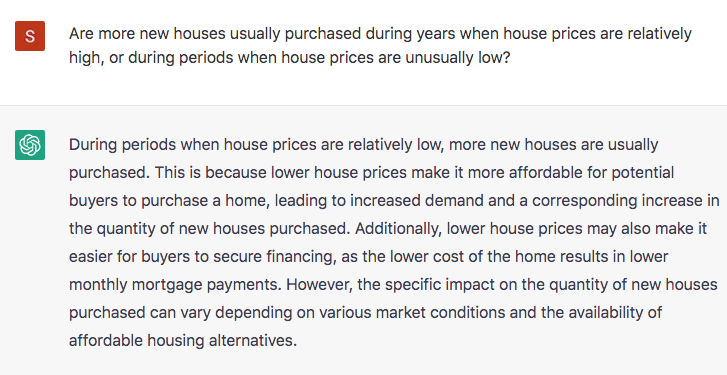

PPS. In the oil market example, one could argue that Chat is reacting to the fact that the oil market is dominated by supply shocks, making the claim “usually true”. But it gives the same answer when confronted with a market dominated by demand shocks, where the claim is usually false:

This gives us insight into how Chat thinks. It does not search the time series data (as I would) to see whether more new houses are sold during periods of high or low prices. Rather, it relies on theory (albeit a false understanding of supply and demand theory).
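The point of the PPS, that the link between price and quantity depends on whether supply shocks or demand shocks dominate a market, can be illustrated with a textbook linear model. The sketch below assumes linear demand `Qd = a - b*P + d` and supply `Qs = c + e*P + s`, with independent demand shocks `d` and supply shocks `s`. The function names and parameter values are hypothetical choices for illustration, not a model of the actual oil or housing markets. A demand shift moves price and quantity in the same direction. A supply shift moves them in opposite directions. With many random shocks, the sign of the price/quantity covariance depends on which kind of shock is larger.

```python
# Minimal linear supply-and-demand sketch (hypothetical parameters).
# Demand: Qd = a - b*P + d   (d shifts demand)
# Supply: Qs = c + e*P + s   (s shifts supply)

def equilibrium(a, b, c, e, d=0.0, s=0.0):
    """Return (price, quantity) at which quantity demanded equals quantity supplied."""
    price = (a - c + d - s) / (b + e)
    quantity = c + e * price + s
    return price, quantity


def price_quantity_cov(b, e, var_d, var_s):
    """Covariance of equilibrium P and Q when d and s are independent shocks.

    P = const + (d - s) / (b + e)
    Q = const + (e*d + b*s) / (b + e)
    so Cov(P, Q) = (e*var_d - b*var_s) / (b + e)**2
    """
    return (e * var_d - b * var_s) / (b + e) ** 2


if __name__ == "__main__":
    a, b, c, e = 100.0, 2.0, 10.0, 1.0
    print(equilibrium(a, b, c, e))          # (30.0, 40.0) baseline
    print(equilibrium(a, b, c, e, d=15.0))  # (35.0, 45.0): P and Q both rise
    print(equilibrium(a, b, c, e, s=15.0))  # (25.0, 50.0): P falls, Q rises
    # Demand shocks dominate: positive covariance (a housing-like case).
    print(price_quantity_cov(b, e, var_d=10.0, var_s=1.0) > 0)  # True
    # Supply shocks dominate: negative covariance (an oil-like case).
    print(price_quantity_cov(b, e, var_d=1.0, var_s=10.0) < 0)  # True
```

In this toy model, a statement like "lower prices mean more is sold" holds on average only when supply shocks dominate. Where demand shocks dominate, lower prices tend to coincide with fewer sales, so the answer cannot be read off the price change alone.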